In recent months, artificial intelligence has grown more powerful than ever, particularly through the app Sora AI, which has sparked widespread controversy as it has slowly infiltrated our feeds. One of its most alarming capabilities is the creation of deepfakes featuring well-known celebrities, such as influencer and boxer Jake Paul. These videos show him, with startling realism, saying and doing things he never has. While viewers may still recognize them as fake, the quality is nearly convincing enough to pass as real.

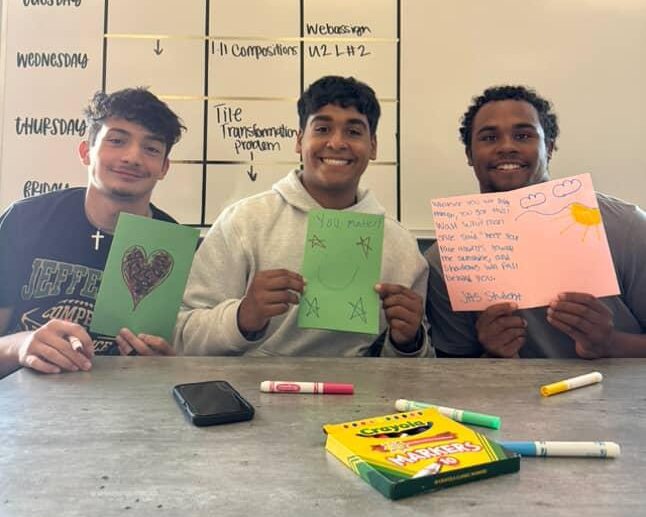

“I don’t really mind AI,” says Kyle Ortega, a senior at Jefferson High School. “It doesn’t involve me, and you can always tell if something is AI or not.” But what happens when that’s no longer true? What’s surprising is how subtly AI has crept into the public consciousness. I’ve personally come across clips that felt real in the moment, until I opened the comments and saw they were fake. It’s getting harder to tell real content from fabricated content.

Celebrities are often the first targets, but everyday people could be next, with their faces used in videos without permission and perhaps even in legal proceedings.

In May 2025, a family presented an AI-generated video in court as part of a victim impact statement for a man killed in a road rage incident. Experts say it was the first time AI had been used to deliver a victim impact statement in court. In this case, the technology served to humanize a victim. But not every use will be so compassionate. In the wrong hands, AI could become a tool of manipulation and power, especially since creating these videos doesn’t require high-tech equipment.

For now, Sora AI remains behind the scenes. But as it continues to evolve, creators and viewers alike may need to think carefully about what’s real and what might be a polished AI fabrication.